In 2025, artificial intelligence has moved from an experimental technology to a fundamental layer of global infrastructure. To the end user, a model's ability to debug code or diagnose a rare disease feels like a single spark of intelligence. However, that spark is actually a carefully choreographed sequence of three distinct architectural phases: the Transformer blueprint, the massive scale of Pre-training, and the surgical refinement of Fine-tuning.

To truly understand modern AI is to understand the Rule of Three. If one pillar is weak, the model collapses into incoherence. Below, we break down these three pillars, exploring the math, the data retrieval, and the silicon that make them possible.

By the end of this article, you’ll understand:

- How Transformers process vast amounts of text in parallel.

- How pre-training teaches a model “general intelligence” and world patterns.

- How fine-tuning (post-training) turns a raw model into a useful, safe product.

The Transformer

The Machine That Thinks in Parallel

The Transformer is the architectural and mathematical foundation of modern AI. Before its introduction in 2017, language models processed text serially, reading one word at a time. This was a massive bottleneck: training was slow, and the model tended to lose track of the beginning of a long passage by the time it reached the end.

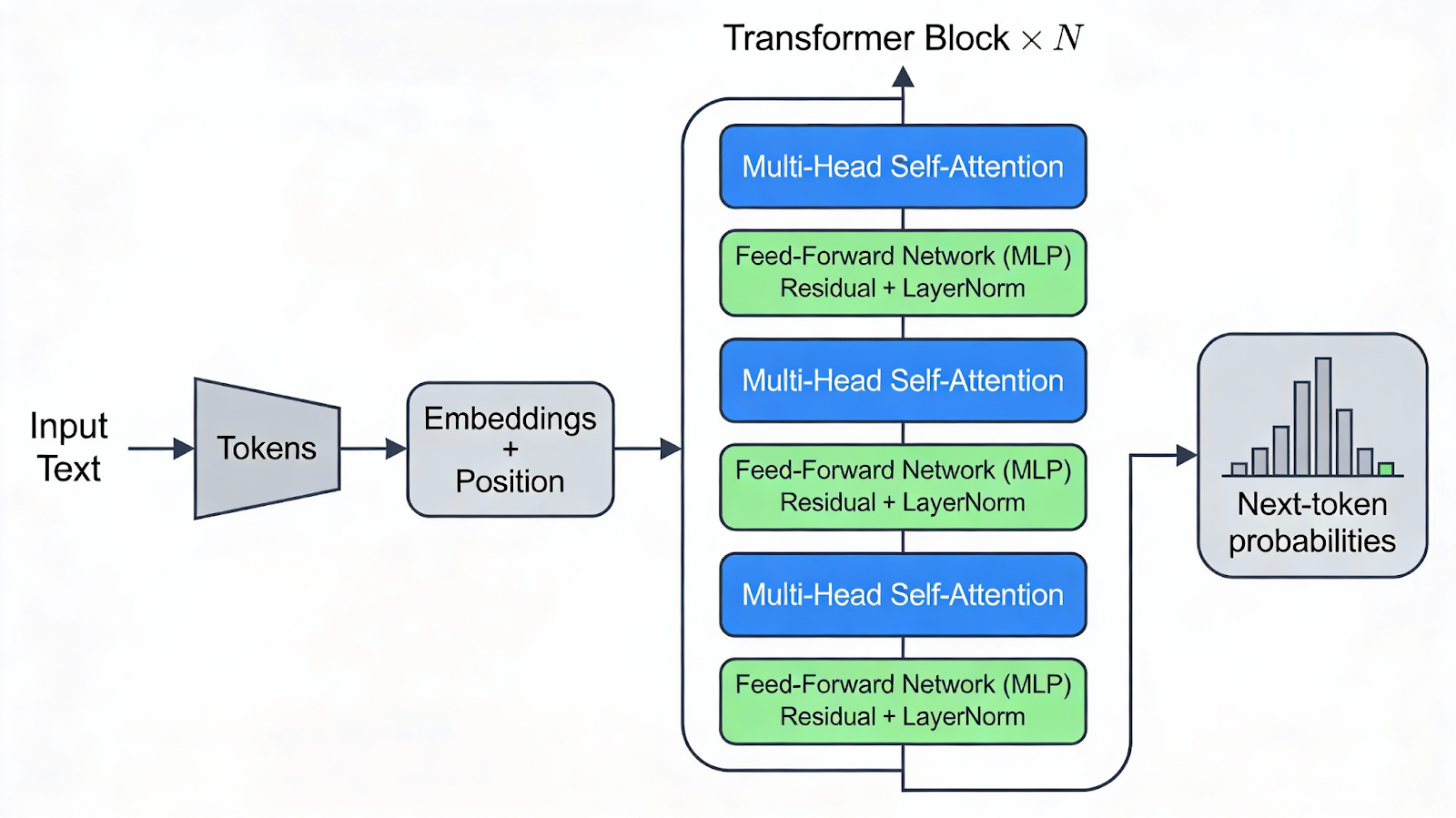

A Simple Transformer Architecture

At a high level, a modern language model is just the same Transformer block repeated many times in a stack. Information flows through it like this:

1. Tokens → Embeddings

The input text is split into tokens (small pieces of words) and each token is mapped to a vector called an embedding.

Positional information is added so the model knows the order of tokens, even though it processes them in parallel.
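As a rough sketch of this step, the snippet below maps words to vectors and adds position information. It assumes a toy whitespace tokenizer, a made-up four-word vocabulary (`VOCAB`), random embedding values, and the sinusoidal position encoding from the original Transformer design. Real models use learned subword tokenizers and learned embedding tables, and many use other position schemes.

```python
import math
import random

# Hypothetical toy vocabulary; real models use subword vocabularies
# with tens of thousands of entries.
VOCAB = {"the": 0, "animal": 1, "was": 2, "tired": 3}
D_MODEL = 8  # embedding width, kept small for readability

random.seed(0)
# Embedding table: one vector per token id (random here, learned in practice).
EMBEDDINGS = [[random.gauss(0.0, 1.0) for _ in range(D_MODEL)] for _ in VOCAB]


def positional_encoding(position, d_model=D_MODEL):
    """Sinusoidal encoding: even dimensions use sin, odd dimensions use cos."""
    encoding = []
    for i in range(d_model):
        # Each sin/cos pair shares one frequency.
        angle = position / (10000 ** ((i - i % 2) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


def embed(text):
    """Return token ids and one vector per token (embedding + position)."""
    token_ids = [VOCAB[word] for word in text.lower().split()]
    vectors = []
    for position, token_id in enumerate(token_ids):
        pos = positional_encoding(position)
        vectors.append([e + p for e, p in zip(EMBEDDINGS[token_id], pos)])
    return token_ids, vectors
```

Calling `embed("The animal was tired")` yields four token ids and four 8-dimensional vectors, one per token.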

2. Stack of Transformer Blocks

Each block has the same internal structure, and the output of one block becomes the input to the next. Inside a block:

- Multi-Head Self-Attention: Every token looks at every other token and decides how much to “attend” to it, using queries, keys, and values.

- Feed-Forward Network (MLP): A small neural network refines each token’s representation independently after attention.

- Residual Connections + Layer Norm: Shortcut connections and normalization keep training stable and help gradients flow.
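To make the last two pieces of the block concrete, here is a minimal sketch of a feed-forward sub-layer wrapped in a residual connection and layer normalization. It assumes the "add, then normalize" ordering of the original Transformer, a ReLU activation, and no learned scale or bias in the normalization. Production models often normalize before the sub-layer and use other activations, and the function names here are illustrative only.

```python
import math


def layer_norm(vector, eps=1e-5):
    """Rescale a vector to zero mean and roughly unit variance."""
    mean = sum(vector) / len(vector)
    variance = sum((v - mean) ** 2 for v in vector) / len(vector)
    return [(v - mean) / math.sqrt(variance + eps) for v in vector]


def feed_forward(vector, w_in, w_out):
    """Two-layer MLP with ReLU, applied to a single token's vector."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, vector))) for row in w_in]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w_out]


def with_residual(vector, sublayer):
    """Add the sub-layer's output back onto its input, then normalize."""
    return layer_norm([x + s for x, s in zip(vector, sublayer(vector))])
```

The shortcut `x + sublayer(x)` means each sub-layer only has to learn a correction to its input, which is part of why very deep stacks remain trainable.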

3. Output Layer (Next-Token Prediction)

After the last block, a linear layer and softmax turn the final vectors into probabilities over the vocabulary.

The model picks (or samples) the next token from that distribution, appends it to the sequence, and repeats this process to generate text step by step.
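The generation loop can be sketched as follows. This is a minimal illustration that assumes greedy decoding (always taking the most probable token) and uses a hypothetical stand-in, `toy_logits`, in place of a real Transformer forward pass. Real systems usually sample with temperature or top-p instead of always taking the maximum.

```python
import math

VOCAB_SIZE = 5  # hypothetical toy vocabulary size


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_logits(token_ids):
    """Stand-in for a model: strongly prefers (last token id + 1)."""
    logits = [0.0] * VOCAB_SIZE
    logits[(token_ids[-1] + 1) % VOCAB_SIZE] = 5.0
    return logits


def generate(next_token_logits, prompt_ids, max_new_tokens, eos_id=None):
    """Greedy decoding: predict, append, repeat."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        probs = softmax(next_token_logits(ids))
        next_id = max(range(len(probs)), key=probs.__getitem__)
        ids.append(next_id)
        if next_id == eos_id:  # stop early at an end-of-sequence token
            break
    return ids
```

With the toy model above, `generate(toy_logits, [0], 3)` extends the prompt one token at a time, feeding each new token back in as input for the next step.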

1. The Multi-Head Attention Mechanism:

The breakthrough of the Transformer was Self-Attention. Instead of reading sequentially, a Transformer views the entire context window (e.g., a whole paragraph) simultaneously.

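Queries, Keys, and Values: The model uses a mathematical “lookup” system. Each token is converted into three vectors: a Query (what am I looking for?), a Key (what do I offer?), and a Value (what is my content?).

By comparing each Query against every Key (a dot product, scaled and normalized with softmax), the model determines how much attention each token pays to every other token. Multi-head attention runs several of these lookups in parallel so that different heads can track different relationships.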
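The lookup described above can be written out as a short sketch of single-head scaled dot-product attention. It assumes the query, key, and value vectors have already been produced by learned projections (not shown), and it uses plain Python lists for readability where a real implementation would use batched matrix multiplications on a GPU.

```python
import math


def softmax(scores):
    """Normalize scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Return (outputs, weights) for single-head scaled dot-product attention."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights
```

Each row of `weights` sums to 1, so every output vector is a blend of the value vectors, tilted toward the tokens whose keys best match the query.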

Contextual Weighting: In the sentence “The animal didn’t cross the street because it was too tired,” the Transformer uses attention to work out that “it” refers to the animal. The attention weights for the word “it” might look like this (illustrative values):

Animal: 0.7 (High relevance)

Street: 0.2 (Low relevance)

Tired: 0.1 (Contextual modifier)

2. Parallelization and the GPU Revolution:

The Transformer won the “architecture wars” largely because it maps well onto GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units). A CPU has a handful of powerful cores built for sequential work, whereas a GPU has thousands of simpler cores built for doing the same arithmetic on many values at once. Because the Transformer looks at every token in the context at once, these cores can calculate attention weights for thousands of tokens simultaneously. This parallelism is a major reason Large Language Models (LLMs) can be trained on trillions of words.

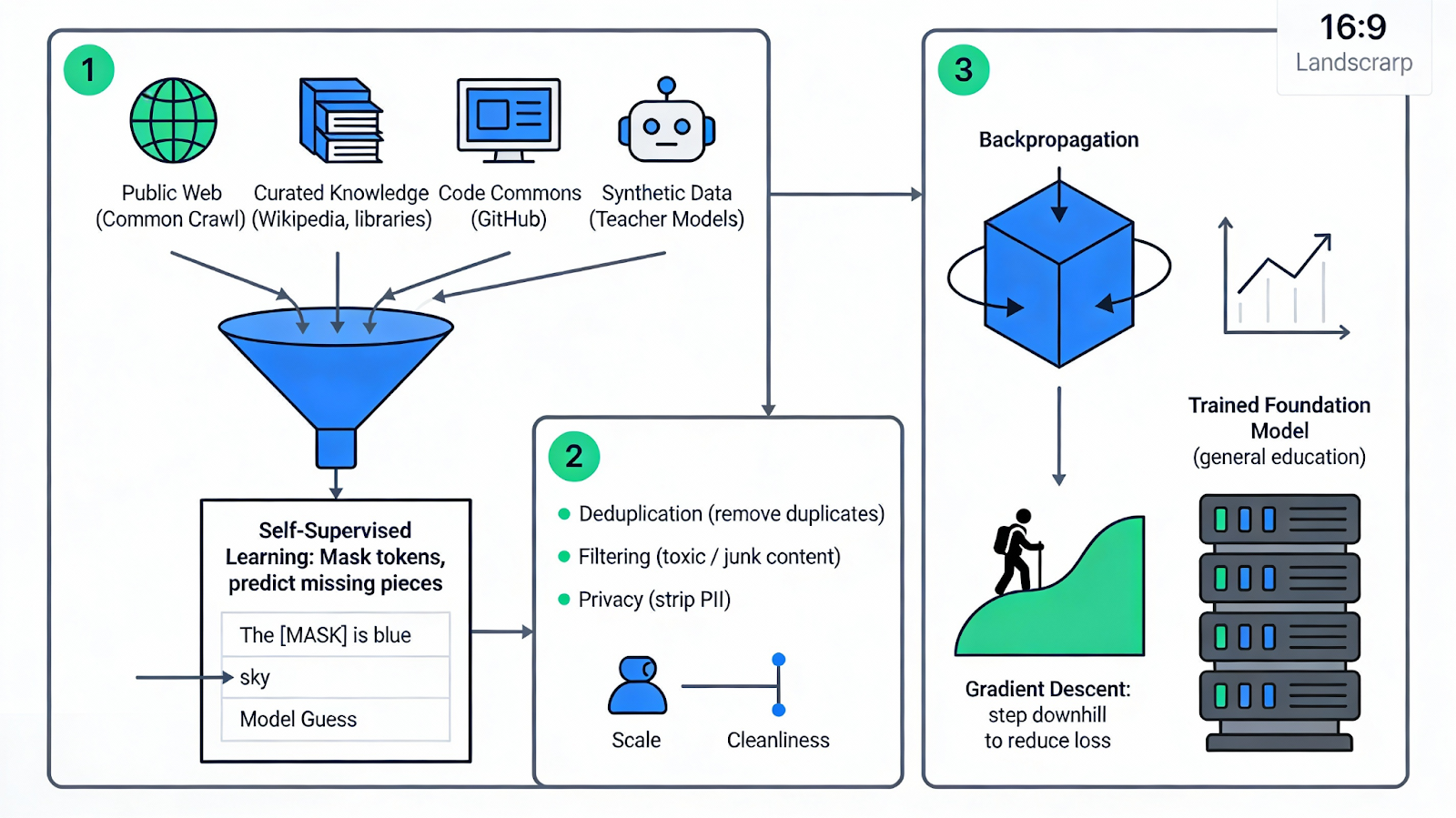

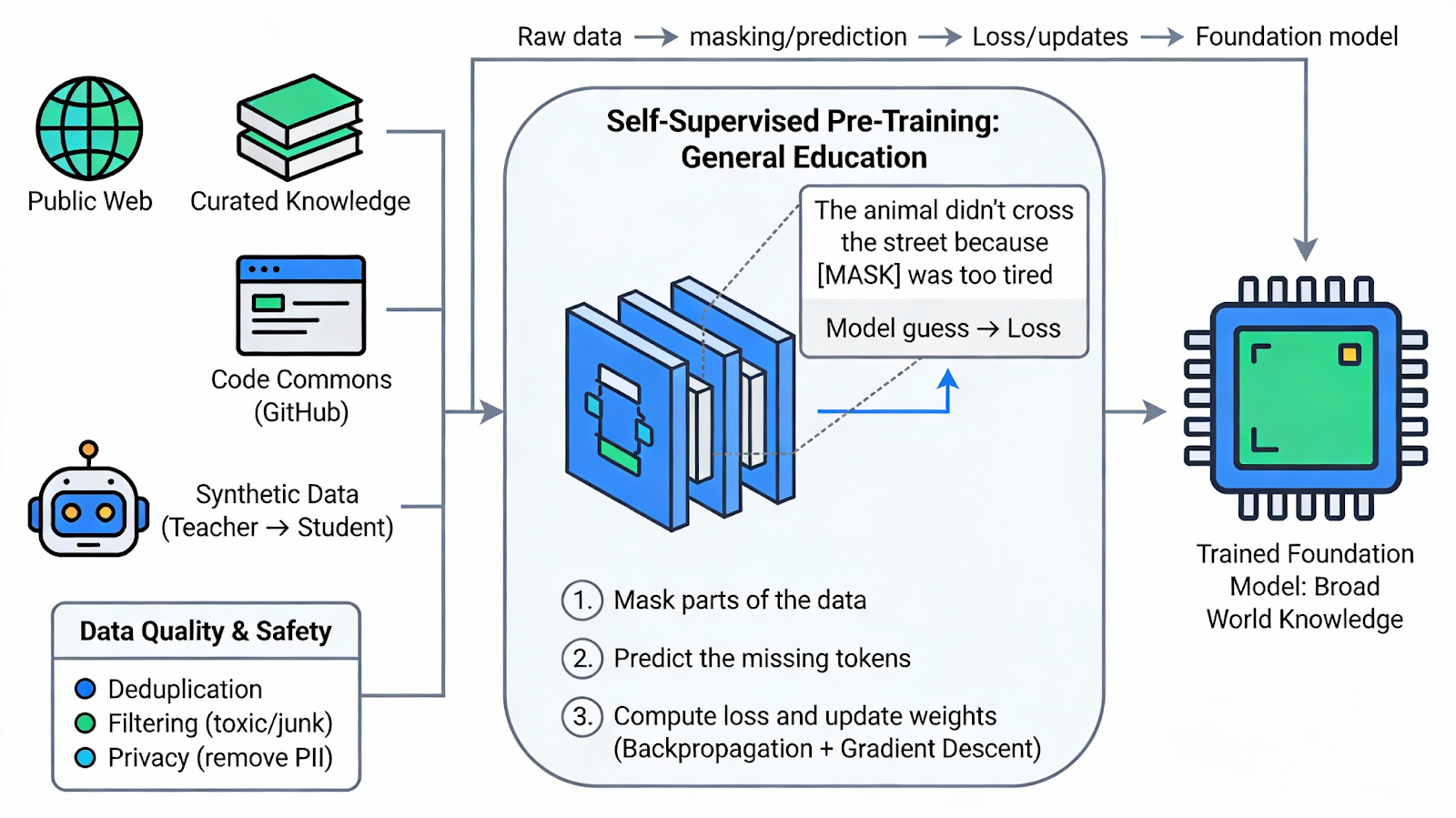

Pre-Training

Building the Foundation

If the Transformer is the engine, Pre-training is the massive fuel tank. This is the stage where a model undergoes a “general education” before it is ever given a specific job.

1. Self-Supervised Learning & Data Retrieval

The most profound shift in AI was the move away from labeled data. We no longer need humans to tell a model “this is a verb.” Instead, we use Self-Supervised Learning. We retrieve massive amounts of data and hide parts of it, forcing the model to guess the missing pieces. BERT-style models mask words in the middle of the text; GPT-style models hide everything after the current position and predict the next token.
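A small sketch shows how raw text supplies its own labels. It assumes whitespace-split tokens, a `[MASK]` placeholder, and a 15% masking rate. The function names are illustrative and not taken from any particular library.

```python
import random

MASK = "[MASK]"


def next_token_pairs(tokens):
    """GPT-style: every prefix becomes an input, the following token its label."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """BERT-style: hide random tokens and remember what was hidden."""
    rng = random.Random(seed)
    masked, targets = [], {}
    for index, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[index] = token  # label the model must recover
        else:
            masked.append(token)
    return masked, targets
```

No human annotation is involved in either function: the hidden tokens themselves are the answers the model is graded against.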

Where is this data retrieved from?

- The Public Web: Massive archives like Common Crawl containing billions of pages from the internet.

- Curated Knowledge: High-density factual data from Wikipedia and digitized library archives.

- The Code Commons: Public repositories from GitHub that teach the model logic and structure.

- Synthetic Data: In 2025, “Teacher Models” are often used to generate well-formatted, logically structured text to train “Student Models,” so that more of the foundation is built on clean reasoning rather than internet noise.

2. Data Quality & Safety:

Retrieving data isn’t just about volume; it’s about cleanliness. Modern pipelines include:

- Deduplication: Removing millions of identical or near-identical web pages to reduce “memorization.”

- Filtering: Using classifiers to remove toxic, low-quality, or purely “junk” content.

- Privacy: Stripping out PII (Personally Identifiable Information) before training begins.

- The Trade-off: There is a constant tension between scale (getting enough data to learn) and cleanliness (ensuring the data is high-quality).
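The three cleaning steps above can be sketched as a single pass over a corpus. This is a deliberately simplified illustration: it assumes exact-match deduplication by hash (real pipelines also catch near-duplicates), a word-count threshold standing in for a trained quality classifier, and an email pattern standing in for full PII detection.

```python
import hashlib
import re

# Simplified email pattern; real PII scrubbing covers many more categories.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return " ".join(text.lower().split())


def is_low_quality(text, min_words=5):
    """Placeholder for a trained quality or toxicity classifier."""
    return len(text.split()) < min_words


def clean_corpus(documents):
    """Deduplicate, filter, and redact emails, in that order."""
    seen = set()
    cleaned = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        if is_low_quality(doc):
            continue
        cleaned.append(EMAIL.sub("[EMAIL]", doc))
    return cleaned
```

Tightening `min_words` or swapping in a stricter classifier makes the data cleaner but smaller, which is the scale-versus-cleanliness tension in miniature.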

3. The Mechanics: Backpropagation & Gradient Descent

How does a model actually learn from this data? It uses a mathematical feedback loop:

- The Error Check: For every prediction, the model compares the probability it assigned to the correct token with the ideal answer and calculates the “Loss” (a measure of how wrong it was).

- Backpropagation: This algorithm travels backward through the layers of the Transformer to compute how much each internal “knob” (parameter) contributed to the error.

- Gradient Descent: This is the optimizer. Think of it as a hiker in a fog trying to find the bottom of a valley. It feels the slope of the “error landscape” and takes a small step in the steepest downward direction to reduce the error.
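The loop above can be shown end to end on a deliberately tiny problem. The sketch assumes a one-parameter model `y = w * x` with squared-error loss, standing in for a Transformer with billions of parameters and a cross-entropy loss. The gradient is derived by hand here, whereas real frameworks compute it automatically via backpropagation.

```python
def loss_and_gradient(w, data):
    """Mean squared error of y = w * x, and its derivative with respect to w."""
    n = len(data)
    loss = sum((w * x - y) ** 2 for x, y in data) / n
    # Chain rule: d/dw (w*x - y)^2 = 2 * (w*x - y) * x
    grad = sum(2 * (w * x - y) * x for x, y in data) / n
    return loss, grad


def train(data, learning_rate=0.05, steps=200, w=0.0):
    """Plain gradient descent: repeatedly step against the slope."""
    history = []
    for _ in range(steps):
        loss, grad = loss_and_gradient(w, data)
        history.append(loss)
        w -= learning_rate * grad  # one step downhill
    return w, history
```

On data generated by `y = 3 * x`, such as `[(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]`, the learned `w` approaches 3 and the recorded loss shrinks toward zero. The `learning_rate` is the hiker's step size: too large and the steps overshoot the valley, too small and progress is slow.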

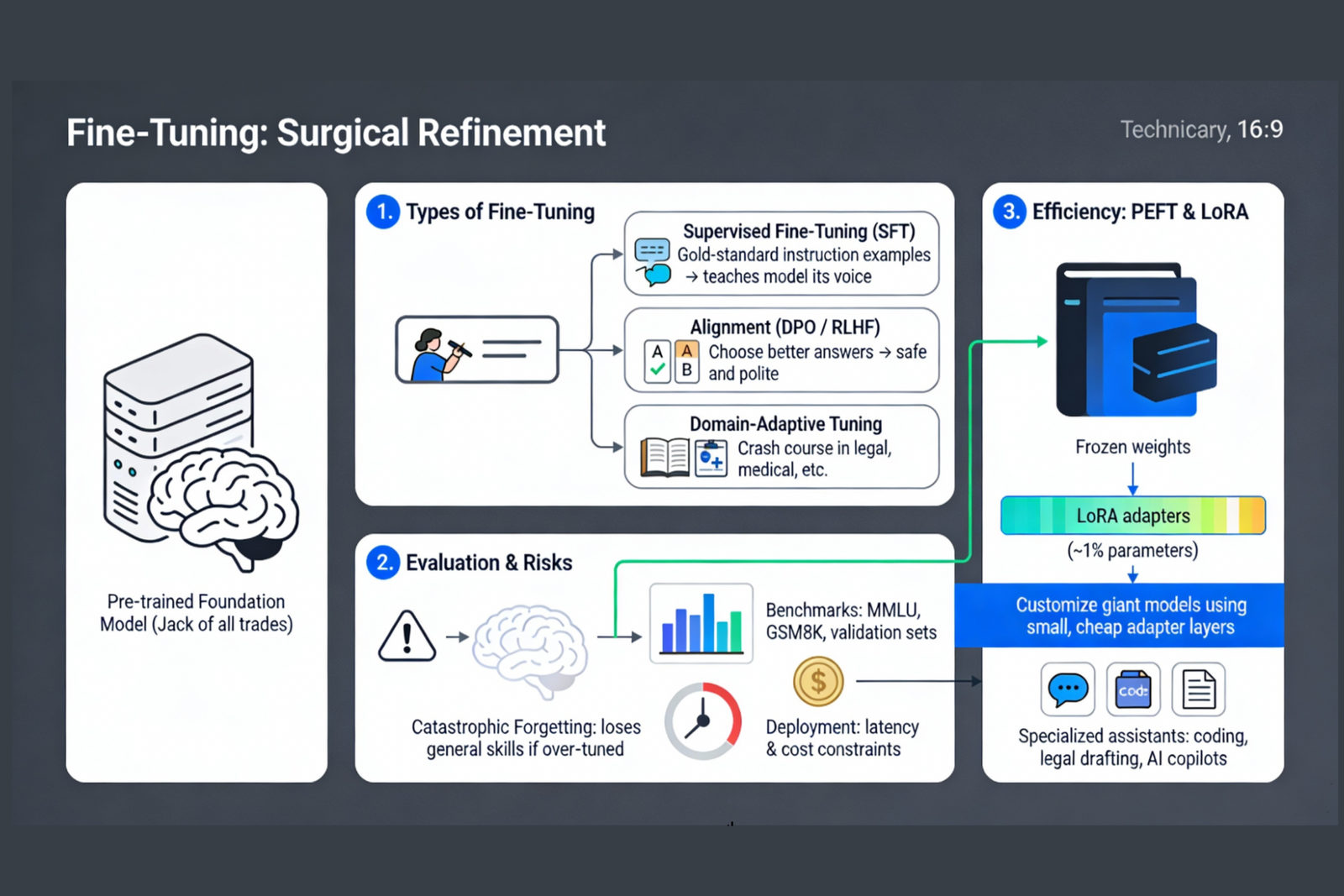

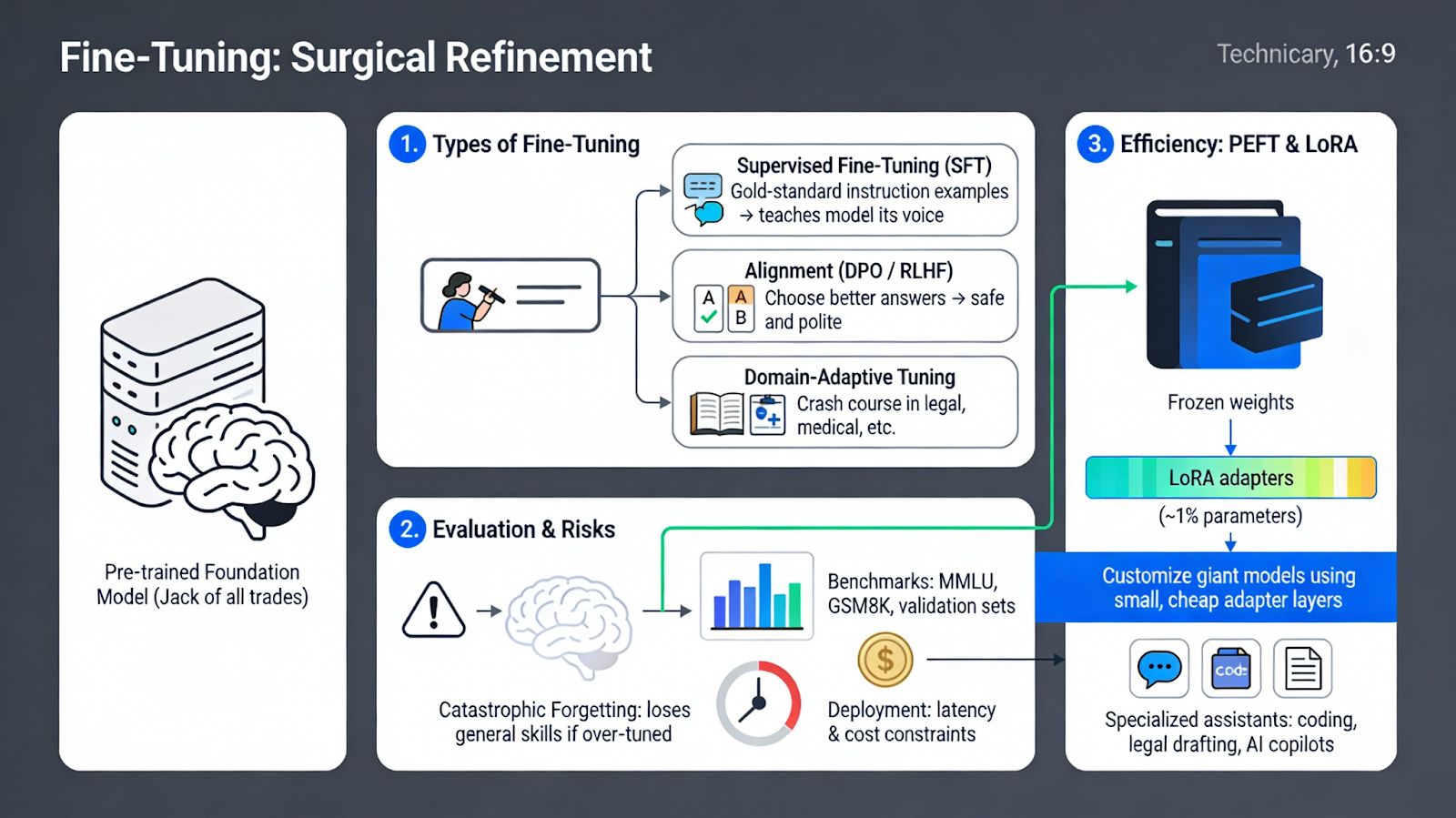

Fine-Tuning

The Surgical Refinement

A pre-trained model is a “Jack of all trades” but a master of none. It might know a great deal about medicine, but it doesn’t know how to speak to a patient. Fine-tuning (now often called Post-Training) is the process of taking that giant, raw brain and teaching it a specific job.

1. Types of Fine-Tuning Processes:

In 2025, we choose the process based on the objective:

Supervised Fine-Tuning (SFT): Humans provide “Gold Standard” examples of how to follow instructions. This teaches the model its “voice.”

Alignment (DPO/RLHF): This steers the model toward safe, helpful, and polite answers. RLHF (Reinforcement Learning from Human Feedback) trains a separate reward model on human preferences. DPO (Direct Preference Optimization) has become a popular alternative in 2025, allowing models to learn directly from pairs of answers in which one is marked as “better,” without needing a separate reward model.

Domain-Adaptive Tuning: Taking a general model and giving it a “crash course” in specialized data, such as legal jargon or medical records retrieved from private corporate databases.
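To illustrate the preference comparison behind DPO, here is a sketch of the loss for a single pair of answers. It assumes each input is the total log-probability of a full response under either the model being trained (the "policy") or a frozen copy of the starting model (the "reference"). The argument names and the `beta` default are illustrative choices, not values from this article.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) answer pair."""
    # How much more the policy likes each answer than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logit = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logit)), written in a numerically stable form.
    if logit > 0:
        return math.log1p(math.exp(-logit))
    return -logit + math.log1p(math.exp(logit))
```

The loss falls as the policy raises the probability of the preferred answer relative to the rejected one, and it equals `log(2)` when the policy and reference agree exactly. No reward model appears anywhere in the computation.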

2. Evaluation and Risks

- Catastrophic Forgetting: If you fine-tune a model too aggressively on medical data, it might lose its general ability to write a poem or do basic math.

- Benchmarks: Developers use benchmark suites such as MMLU (broad knowledge) and GSM8K (grade-school math) to check if fine-tuning actually improved the model or just broke its general reasoning.

- Deployment Constraints: Fine-tuned models must be optimized for latency and cost to be useful in real-world apps.

3. The Efficiency Revolution: PEFT and LoRA

Full fine-tuning of a large model used to demand a large GPU cluster and a budget to match. Today, we use Parameter-Efficient Fine-Tuning (PEFT), most commonly LoRA (Low-Rank Adaptation). Instead of changing all 70 billion parameters, we “freeze” the pre-trained model and train only small low-rank “adapter” matrices, often around 1% of the parameter count or less. This allows a company to customize an AI for a specific task like coding or legal drafting for a fraction of the cost.
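The savings come from simple arithmetic, sketched below. The example assumes a single weight matrix of shape `d_out x d_in` adapted with two small matrices, `A` (`rank x d_in`) and `B` (`d_out x rank`). The sizes used in the usage note are illustrative, not taken from a specific model.

```python
def lora_trainable_fraction(d_out, d_in, rank):
    """Compare trainable parameters: LoRA adapter vs. the full matrix."""
    full = d_out * d_in
    adapter = rank * (d_out + d_in)  # A is rank x d_in, B is d_out x rank
    return adapter, full, adapter / full


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(x, frozen_w, lora_a, lora_b, scale=1.0):
    """y = W x + scale * B (A x); only A and B would be trained."""
    base = matvec(frozen_w, x)
    update = matvec(lora_b, matvec(lora_a, x))
    return [b + scale * u for b, u in zip(base, update)]
```

For a 4096 x 4096 matrix at rank 8, `lora_trainable_fraction(4096, 4096, 8)` gives 65,536 adapter parameters against 16,777,216 in the full matrix, or about 0.39%. LoRA initializes `B` to zeros, so before any training the adapted model behaves exactly like the frozen one.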

Comparing the Pillars

| Feature | Pre-Training | Fine-Tuning |
| --- | --- | --- |
| Objective | Build General Knowledge (Base Model) | Build Task-Specific Skill (Chatbot/Specialist) |
| Data Volume | Trillions of Tokens (e.g., 2T to 15T) | Thousands of Examples (e.g., 10k to 100k) |
| Data Retrieval | Scraped Public Web, GitHub, Wikipedia | Private Enterprise Data, Labeled Q&A Pairs |
| Estimated Cost | $10M – $200M+ (Compute & Power) | $10 – $10,000 (using PEFT/LoRA) |
| Hardware | Clusters of thousands of H100 GPUs | Single GPU or small local cluster |
| Timeframe | Months of continuous training | Hours to Days |

Ultimately, the magic of modern AI is not found in any single component, but in the seamless integration of these three layers. The Transformer provides the structural genius that allows for parallel thought; Pre-training builds the vast, generalized understanding of the world; and Fine-tuning shapes that raw potential into a tool that is safe, specific, and usable. As we look toward the future of AI infrastructure, the advancements will likely not come from replacing this “Rule of Three,” but from optimizing the connection between them, creating models that are not just knowledgeable, but surgically precise in their application to human needs.