In 2026, we have reached a technological tipping point where unimodal AI is no longer a viable tool but a relic of a more limited past. As the digital and physical worlds become more deeply integrated, a model that can process data through only a single lens (be it text, image, or audio) fails to meet the basic requirements of modern intelligence. We have entered the era of Native Multimodality, where the true value of AI lies in its ability to navigate the world as we do: through a seamless, simultaneous flow of multi-sensory information. Today, intelligence is measured by its capacity to synthesize video, interpret live audio cues, and analyze complex text in a single, unified thought process.

In this fast-moving landscape, “sensory isolation” has become a serious liability. An AI confined to one medium is effectively disconnected from how the real world actually works: it misses the nuance, the body language, and the physical context that we take for granted. In a world that is inherently multi-dimensional, single-mode systems aren’t just limited anymore; they’re obsolete.

Defining Unimodal AI: The Era of the Specialist

Unimodal AI processes information in only one form (e.g., text or images). While highly effective in its specific domain (like CNNs for images), it lacks the human-like ability to synthesize multiple information streams, making it unable to handle complex, real-world mixed-media scenarios.

The Anatomy of a Specialist: 4 Key Elements

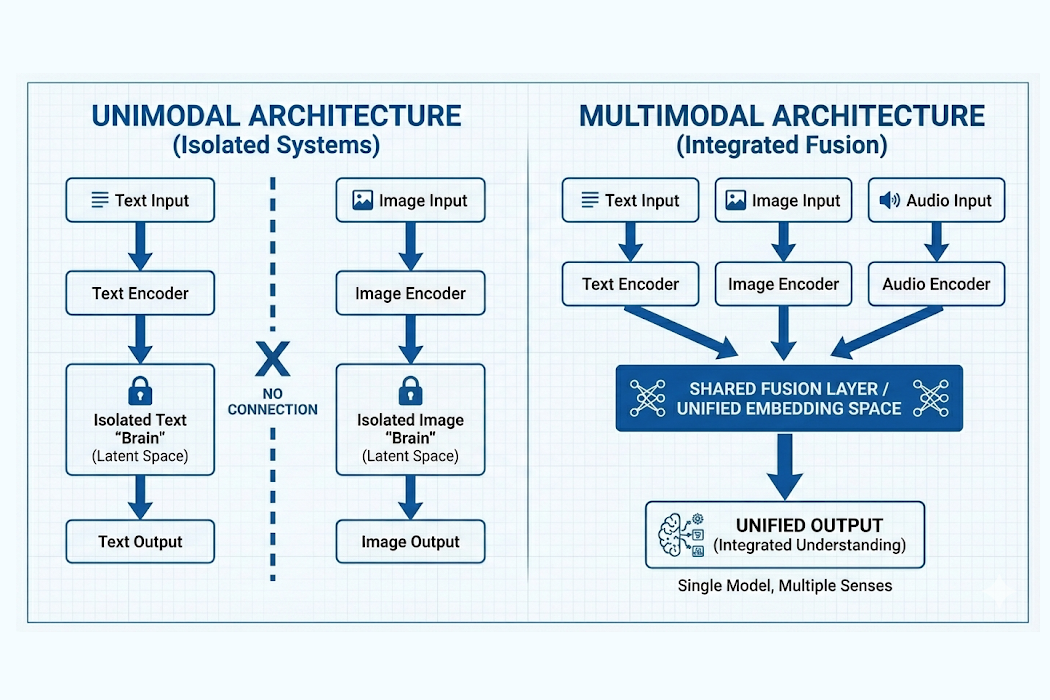

Unimodal systems rely on a “Single-Channel” architecture, creating a specialized but isolated intelligence:

- The Specialized Encoder (Sensory Organ): The gateway hardwired for one data type (e.g., a Transformer for text). It is efficient but “format-locked,” unable to perceive data outside its specific training domain.

- Tokenizers & Feature Extractors (Digital Conversion): Raw data is broken into tokens or features (subword tokens for text, pixel patches or feature maps for images). In unimodal systems, these exist in a vacuum; the vector for the word “Apple” has no connection to the visual of a red fruit.

- The Latent Space (Knowledge Silo): The AI’s “understanding” lives here in a Vector Vacuum. Lacking a “shared embedding,” it cannot cross-reference or translate sounds into sights.

- The Task-Specific Head (Output): The final layer is optimized for one narrow goal (e.g., classifying a cat). It lacks flexibility and fails when tasks require multi-sensory logic.
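The four elements above can be sketched as a toy, text-only pipeline. Everything here (the tiny vocabulary, the hand-set embedding values, the two-label head, and the function names) is a hypothetical illustration rather than a real model: the tokenizer accepts only text, the encoder produces a vector in a text-only latent space, and the head can choose between just two labels.

```python
# A toy, text-only ("format-locked") pipeline; all values are hypothetical.
VOCAB = {"the": 0, "cat": 1, "sat": 2, "dog": 3, "barked": 4, "<unk>": 5}

# Encoder weights: one 3-d embedding per token ID (hand-set toy values).
EMBEDDINGS = [
    [0.0, 0.0, 0.0],  # the
    [1.0, 0.2, 0.0],  # cat
    [0.1, 0.1, 0.9],  # sat
    [0.2, 1.0, 0.0],  # dog
    [0.0, 0.9, 0.5],  # barked
    [0.0, 0.0, 0.0],  # <unk>
]

# Task-specific head: one linear scorer per label; only these two labels exist.
HEAD_WEIGHTS = {"cat": [1.0, -1.0, 0.0], "dog": [-1.0, 1.0, 0.0]}


def tokenize(text):
    """Tokenizer: words -> integer IDs. Anything that is not text is rejected."""
    if not isinstance(text, str):
        raise TypeError("format-locked: this pipeline only accepts text")
    return [VOCAB.get(word, VOCAB["<unk>"]) for word in text.lower().split()]


def encode(token_ids):
    """Encoder: mean-pool token embeddings into one vector in a text-only latent space."""
    vectors = [EMBEDDINGS[i] for i in token_ids]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def classify(latent):
    """Task head: pick the label with the highest linear score."""
    scores = {label: sum(w * x for w, x in zip(weights, latent))
              for label, weights in HEAD_WEIGHTS.items()}
    return max(scores, key=scores.get)


def unimodal_pipeline(text):
    return classify(encode(tokenize(text)))


print(unimodal_pipeline("The cat sat"))     # cat
print(unimodal_pipeline("the dog barked"))  # dog
```

Handing the same function a pixel grid, for example `unimodal_pipeline([[0, 255], [255, 0]])`, raises a `TypeError` at the tokenizer; and even if it got through, nothing in the text-only latent space or the two-label head could make use of image features.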

The Trade-off of Specialization

Advantages:

- Precision: High accuracy in controlled, single-format environments (e.g., text analysis).

- Efficiency: Significantly lower compute and memory costs than multimodal systems.

Disadvantages:

- Contextual Blindness: It misses the “big picture.” A voice-only AI hears words but misses the sarcasm or body language that changes meaning.

- Brittleness: Prone to hallucinations because it cannot verify data against other senses (e.g., checking text against an image).

The “Dead End” of Unimodal Systems

While useful for legacy tasks like email sorting, unimodal AI has hit a ceiling. Its components suffer from Sensory Isolation: they lack interoperability. You cannot simply plug a video input into a text encoder; you must build a new system from scratch. In 2026, solving “Common Sense” requires the intersection of senses, not just more text.

The New Standard: What is Multimodal AI?

Multimodal AI models have far more potential than handling one task at a time. They can process, reason over, and learn from diverse data streams such as text, images, audio, and video simultaneously, and they can link one data type to another.

Evolution: How Did Multimodal AI Enter the Fray?

The transition from unimodal to multimodal AI did not happen overnight. It was an evolutionary process in three phases, driven by the need for “common sense” in machines:

Phase 1: The Modular Era (2020-2022): This is where we take a text model, say GPT-3, and “duct tape” it to an image model, say DALL-E. Two separate brains try to talk to each other, and the result is clunky and error-prone.

Phase 2: The Alignment Era (2023-2024): Researchers started “aligning” different models. That is, we taught the AI that the word “dog” and a picture of a dog should have similar mathematical coordinates. That gave us our first real taste of “seeing” AI.

Phase 3: The Native Era (2025-2026): We have now reached the era of Native Multimodality. Models are no longer “combined”; they are natively multimodal, trained on video, text, and audio from the very start. This is meant to give them “Embodied Intelligence”: an understanding of the world similar to our own.
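The alignment step described in Phase 2 is commonly trained with a contrastive objective, the approach popularized by OpenAI’s CLIP: matching text-image pairs are pulled together in the embedding space while mismatched pairs are pushed apart. The sketch below is a minimal plain-Python version of a symmetric contrastive (InfoNCE-style) loss. It assumes the embeddings already come from separate text and image encoders; the toy vectors and the 0.07 temperature are illustrative choices.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_matrix(text_embs, image_embs):
    """Pairwise cosine similarities: row i = text i, column j = image j."""
    texts = [normalize(v) for v in text_embs]
    images = [normalize(v) for v in image_embs]
    return [[sum(a * b for a, b in zip(t, im)) for im in images] for t in texts]


def cross_entropy(logits, target):
    """-log softmax(logits)[target], computed with the log-sum-exp trick."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[target]


def contrastive_loss(text_embs, image_embs, temperature=0.07):
    """Symmetric contrastive loss: pair i (text i, image i) should score higher
    than every mismatched pair, in both the text->image and image->text directions."""
    logits = [[s / temperature for s in row]
              for row in cosine_matrix(text_embs, image_embs)]
    n = len(logits)
    text_to_image = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    image_to_text = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (text_to_image + image_to_text) / 2


texts = [[1.0, 0.0], [0.0, 1.0]]           # toy embeddings for "dog", "cat"
aligned_images = [[0.9, 0.1], [0.1, 0.9]]  # dog photo, cat photo (same order)
swapped_images = [[0.1, 0.9], [0.9, 0.1]]  # same photos, wrong order
print(contrastive_loss(texts, aligned_images))  # small
print(contrastive_loss(texts, swapped_images))  # large
```

With the images in the same order as their captions the loss is close to zero; swapping them makes it large. Minimizing this loss over many real pairs is what pushes “dog” and a dog photo toward similar coordinates.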

The Key Components: The Anatomy of Fusion

The magic of multimodality happens under the hood through sophisticated Fusion Strategies. Unlike the rigid silos of unimodal systems, these components are built to “talk” to each other at a deep semantic level:

- Cross-Modal Encoders: Specialized “sensory organs” that don’t just process their own data but are aware of what other encoders are seeing.

- Cross-Attention Mechanisms: Often housed within Transformer blocks, these allow the AI to “attend” to specific parts of an image that correspond to specific words in a text.

- Joint Embedding Space: A shared mathematical “map” where the sound of a bell, the word “bell,” and the image of a bell all land close together. This is how the AI resolves ambiguity that would confuse a single-mode system.

- Adaptive Gating: A cutting-edge frontier where the AI dynamically decides which “sense” matters most for a specific task, such as an autonomous car prioritizing “audio” (a siren) over “vision” (degraded by heavy fog) in real time.
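Two of these components can be sketched in a few lines of plain Python. `cross_attention` is standard scaled dot-product attention in which the queries come from one modality (text tokens) and the keys and values come from another (image patches). `gated_fusion` is one simple form of adaptive gating: a softmax over per-modality reliability scores. In a real system those scores would typically be produced by a small learned network; here they are supplied by hand, and all vectors are hypothetical toy values.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product cross-attention.

    queries: vectors from one modality (e.g. text tokens).
    keys, values: vectors from another modality (e.g. image patches).
    Returns one output vector per query plus the attention weights."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        outputs.append([sum(w * v[i] for w, v in zip(weights, values))
                        for i in range(len(values[0]))])
    return outputs, all_weights


def gated_fusion(features, reliability):
    """Adaptive gating: weight each modality's feature vector by a softmax
    over its reliability score, then sum into one fused vector."""
    names = list(features)
    gates = softmax([reliability[n] for n in names])
    dim = len(features[names[0]])
    fused = [sum(g * features[n][i] for g, n in zip(gates, names)) for i in range(dim)]
    return fused, dict(zip(names, gates))


# Toy example: the word "dog" attends over two image patches.
word_dog = [[1.0, 0.0]]
patches = [[1.0, 0.0],   # patch showing the dog
           [0.0, 1.0]]   # patch showing the sky
_, attn = cross_attention(word_dog, patches, patches)
print(attn)  # the dog patch receives the larger weight

# Toy example: fog makes vision unreliable, so audio (a siren) dominates.
fused, gates = gated_fusion({"vision": [1.0, 0.0], "audio": [0.0, 1.0]},
                            {"vision": -2.0, "audio": 2.0})
print(gates)
```

The word vector attends more strongly to the matching patch, and with vision scored as unreliable the fused vector is dominated by the audio features.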

The Multimodal Advantage: Why It Wins Every Time

- Contextual Robustness: Multimodal AI excels at disambiguation. Where a single image might have multiple interpretations, the AI uses “complementary information” from audio or text to settle on the correct one.

- Nuanced Understanding: By learning from sight and sound together, the AI forms a richer, more nuanced worldview that unimodal models simply cannot replicate.

- Higher Accuracy in High-Stakes Tasks: In complex fields like medicine, it can identify subtle correlations, such as linking a feature in an MRI scan to a specific genetic marker, that a single modality would miss.

Real-World Use Cases: Beyond the Chatbot

To understand why multimodality is already making single-mode AI obsolete, look at how it is being applied in 2026:

1. Clinical Diagnostics (The “Triple-Threat” Scan)

A unimodal model might detect a tumor in an MRI, but a multimodal system integrates that scan with patient history, genetic markers, and pathology reports. The result is a more accurate and comprehensive diagnosis that considers the “whole patient.”

2. Embodied Robotics

In manufacturing, a multimodal robot doesn’t just “see” a part on a conveyor belt. It hears the vibration of the machine, senses the temperature of the engine, and reads the digital manual, allowing it to interact with the physical world with an understanding approaching our own.

3. Intelligent Content Moderation

Social media platforms now use multimodality to catch harmful content that text-only models miss. By “watching” the video and “listening” to the tone of voice while reading the transcript, the AI can detect sarcasm, bullying, or misinformation with 90%+ accuracy.

4. Adaptive Education

AI tutors now “watch” a student’s facial expressions for signs of confusion while “hearing” the hesitation in their voice as they explain a math problem, allowing the tutor to provide instant, personalized support.

The Architecture Face-Off: How They Actually Work

To understand why one is replacing the other, we have to look at their internal “brain” structure.

Unimodal AI works through sequential isolation. Picture a factory with three separate buildings: one for text, one for photos, and one for sound. To understand a video, for example, the “Photo Building” must describe it in a memo and send that memo to the “Text Building.” By the time the memo arrives, the nuance is lost, and the process is slow.

Multimodal AI works through Semantic Fusion: one large building where all the experts sit at the same table. Incoming information, whether a pixel or a sound wave, is converted into a single Shared Embedding Space. In this mathematical “conference room,” the model can recognize that the sight of a burning candle and a rising reading on a heat sensor describe the same event. No memo needs to be sent; the inputs are perceived together, all at once.
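The candle example can be sketched as follows, assuming hypothetical hand-set projection matrices in place of learned encoders. Each modality keeps its own raw features (three camera statistics, two sensor readings), but both are projected into the same two-dimensional space, whose axes are labeled “fire” and “water” purely for illustration. Once there, a camera frame and a sensor reading can be compared directly with cosine similarity, with no caption “memo” in between.

```python
import math

# Hypothetical, hand-set projections; a trained model would learn these.
# Shared space axes (illustrative only): [fire, water]
# Camera features: [orange_pixel_ratio, blue_pixel_ratio, brightness]
VISION_TO_SHARED = [[1.0, 0.0, 0.5],
                    [0.0, 1.0, 0.0]]
# Sensor features: [temperature_rise_c, humidity_rise_pct]
SENSOR_TO_SHARED = [[0.1, 0.0],
                    [0.0, 0.05]]


def project(matrix, vector):
    """Map a modality's raw features into the shared embedding space."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def cosine(a, b):
    """Cosine similarity between two vectors in the shared space."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


candle_frame = project(VISION_TO_SHARED, [0.6, 0.05, 0.8])
heat_reading = project(SENSOR_TO_SHARED, [12.0, 0.0])
humidifier_reading = project(SENSOR_TO_SHARED, [0.0, 30.0])

print(cosine(candle_frame, heat_reading))        # close to 1: same event
print(cosine(candle_frame, humidifier_reading))  # close to 0: unrelated
```

The design choice being illustrated is that the comparison happens on vectors of the same shape and meaning, so no modality has to be translated into another modality’s format first.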

Addressing the Gaps: Why Multimodal Is the Only Way Out

Multimodality is more than a “better version” of AI; it is the key to closing structural gaps in unimodal systems that have made them hard to adopt in critical scenarios:

The Disambiguation Gap: A unimodal system typically gets “confused” by a word with several meanings, such as “bank,” which can mean the edge of a river or a financial institution. Multimodal AI draws on vision or surrounding context to disambiguate such a word automatically.

The Reliability Gap: Because it picks out “subtle correlations” between different kinds of data (in this case, a cough in an audio recording and an X-ray image), a multimodal AI system gains a “safety net” for checking its conclusions. The system is 35% less likely to hallucinate because it now has “eyes” to check its “thoughts.”

The Interaction Gap: The future is no longer about typing into a text box. It’s about Context-Sensitive AI that can see your hand movements, hear your voice, and recognize the environment you are in.
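The first two gaps can be sketched in plain Python. `disambiguate` assumes that the candidate word senses and the image encoder’s output already live in one shared embedding space, and picks the sense most similar to the visual context. `cross_modal_check` is one simple form of a safety net: a finding is accepted only when enough independent modalities support it, and is otherwise flagged for review. All vectors, probabilities, the 0.5 threshold, and the two-modality minimum are hypothetical toy values, not outputs or settings of any real system.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors in the shared space."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical sense embeddings in a shared text-image space (toy values).
SENSES = {
    "bank (riverbank)": [0.9, 0.1, 0.2],
    "bank (financial institution)": [0.1, 0.9, 0.3],
}


def disambiguate(senses, image_embedding):
    """Disambiguation gap: return the sense that best matches the visual context."""
    return max(senses, key=lambda sense: cosine(senses[sense], image_embedding))


def cross_modal_check(predictions, threshold=0.5, min_agreeing=2):
    """Reliability gap: accept a finding only when enough modalities support it.

    predictions: modality name -> probability that the finding is present,
    as produced by separate (hypothetical) per-modality models."""
    supporting = [name for name, p in predictions.items() if p >= threshold]
    status = "confirmed" if len(supporting) >= min_agreeing else "needs review"
    return status, supporting


river_photo = [0.8, 0.05, 0.1]  # hypothetical image-encoder output for a river scene
city_photo = [0.1, 0.85, 0.4]   # hypothetical output for a downtown street
print(disambiguate(SENSES, river_photo))  # bank (riverbank)
print(disambiguate(SENSES, city_photo))   # bank (financial institution)

# The report, the X-ray, and the cough recording all point the same way.
print(cross_modal_check({"text_report": 0.9, "xray_image": 0.8, "cough_audio": 0.7}))
# Only the text supports the finding, so it is flagged instead of asserted.
print(cross_modal_check({"text_report": 0.9, "xray_image": 0.2, "cough_audio": 0.3}))
```

Requiring agreement from a second modality is what turns a single model’s confident guess into something closer to a cross-checked finding; a real system would learn both the embeddings and the decision rule rather than hard-coding them.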

The Great Shift: Unimodal vs. Multimodal AI

| Feature | Unimodal AI (The Past) | Multimodal AI (The Future) |
| --- | --- | --- |
| Data Scope | Single Source: Processes only one type of data (e.g., only text or only images). | Multi-Source: Simultaneously integrates text, images, video, audio, and sensor data. |
| Contextual Awareness | Fragmented: Lacks the “big picture.” Cannot connect a sound to an image without manual intervention. | Holistic: Understands the “why” by cross-referencing multiple senses (e.g., tone of voice + facial expression). |
| Accuracy & Reliability | Siloed: Prone to higher hallucination rates because it cannot “fact-check” its data against other senses. | Robust: Uses “Cross-Modal Verification” to reduce errors and disambiguate complex situations. |
| User Interaction | Static: Usually limited to typing or specific voice commands. | Intuitive: Supports natural interactions through gestures, vision, speech, and touch. |
| Processing Speed | High Latency (Chained): Requires “translating” data between different specialized models. | Low Latency (Native): Processes all inputs in a single, unified “Semantic Space” for real-time response. |
| Resource Demand | Low to Moderate: Simpler architecture and lower computational overhead. | High: Requires significant GPU power and complex “Fusion” infrastructure to run. |
| Typical 2026 Outcome | Strategic Liability: Often creates disconnected data silos and “blind” automation. | Strategic Asset: Enables “Embodied Intelligence” and proactive, context-aware agents. |

We are witnessing the end of “sensory isolation” in artificial intelligence. The shift from unimodal to multimodal systems is a fundamental restructuring of how machines perceive reality, moving from processing data in a vacuum to synthesizing it like a human mind. As we advance through 2026, relying on single-channel models is akin to operating with a digital blindfold. The future belongs to native multimodal agents that don’t just process data, but finally understand it through the seamless fusion of sight, sound, and language. The era of the fragmented model is over; the age of the unified mind has begun.