What are transformers?

Transformers are a type of artificial neural network architecture. They don’t think like a human brain; they predict patterns. When we chat with LLMs such as ChatGPT, Gemini, or Microsoft Copilot, it can feel like talking to a human, but the model only mimics human language in a way that sounds intelligent and emotional. It doesn’t understand anything the way we do. The transformer architecture was introduced in 2017 and changed AI: it is the heart of today’s LLMs and the reason they are so powerful.

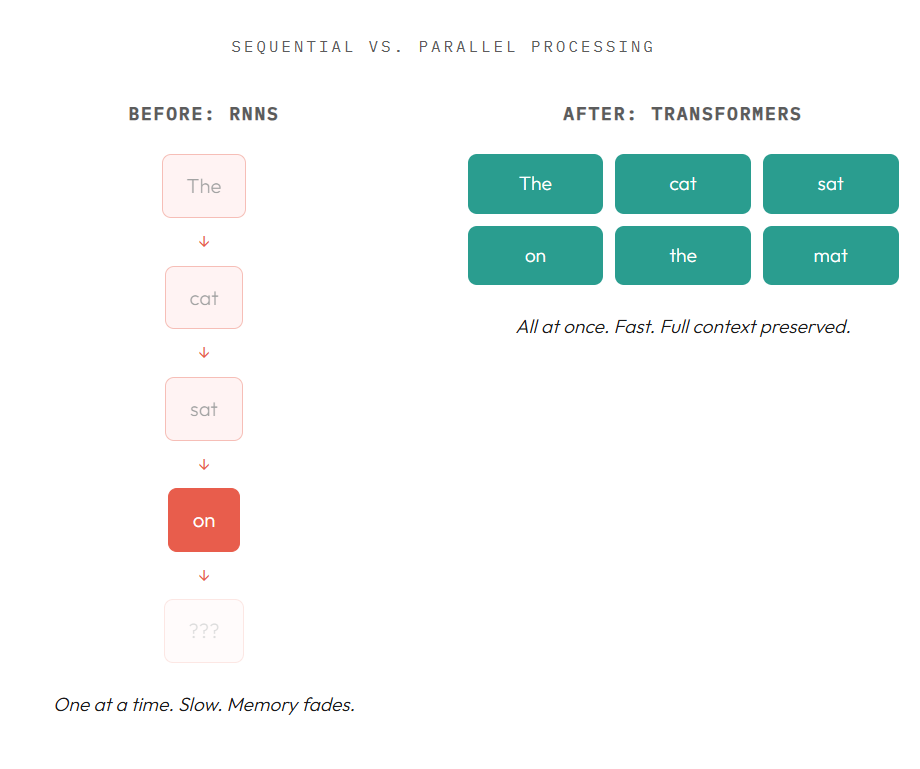

Before transformers, we depended on RNNs and LSTMs. RNN stands for recurrent neural network and LSTM stands for long short-term memory. These models read text sequentially, one word at a time, which made processing slow. That became a major obstacle: they struggled with long sentences and tended to forget earlier context.

They cannot be trained in parallel, and their speed makes them extremely hard to use with large datasets. It’s like reading a long paragraph and, by the time you reach the end, forgetting the first few lines. As a result, earlier models were slow to train and very small compared with today’s GPT-level models.

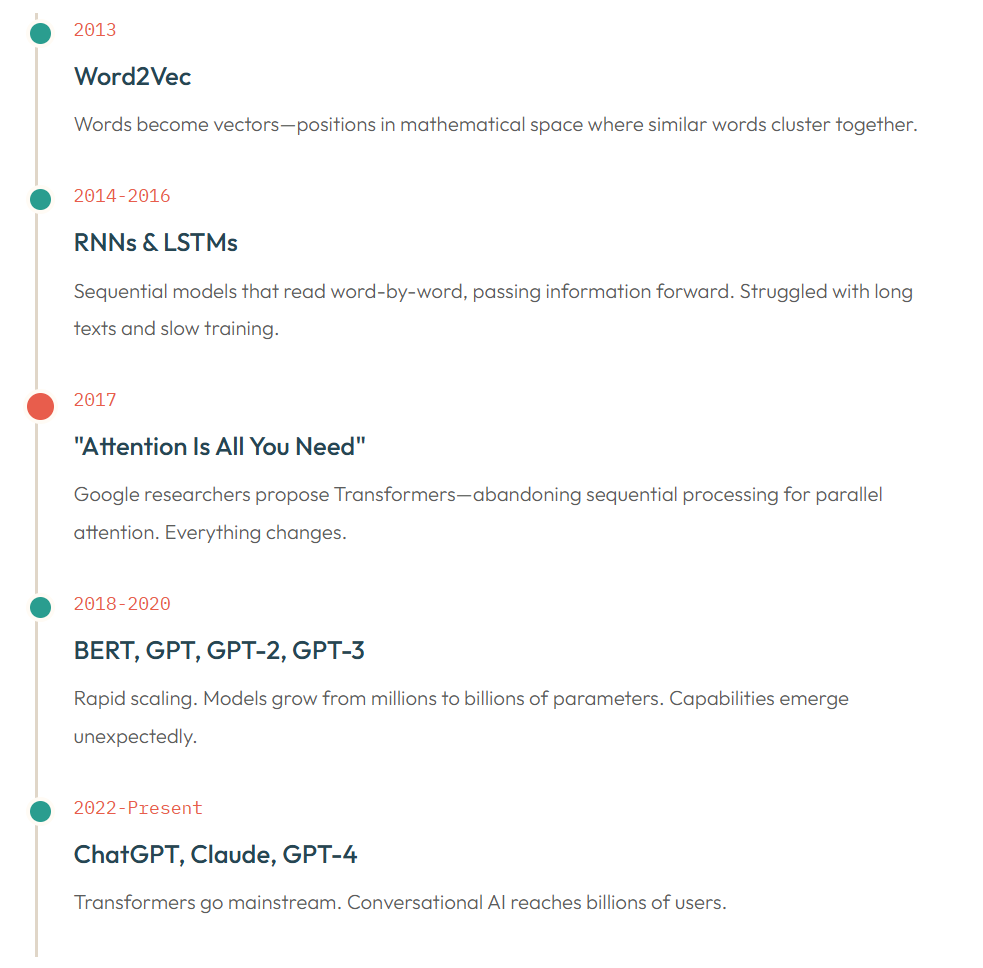

A Brief History: How We Got Here

How are transformers different from RNNs and LSTMs?

Transformers do not read a line strictly left to right, one word at a time. They process all the words in parallel, as if reading a whole page at once and then deciding which parts matter most. This speeds up training enormously, which is why current models can be trained on billions or even trillions of words.

Transformers = parallel processing + attention
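The difference between sequential and parallel processing can be sketched in a few lines of NumPy. This is only an illustration under assumed toy sizes and random weights (`W`, `U`, and the `tanh` update are hypothetical stand-ins, not any real model's parameters). The loop shows why a recurrent model must wait for each step, while the last line shows a per-token computation that covers every position in one matrix product. The parallel line is not attention itself, only the kind of operation that attention and feed-forward layers are built from.

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, d = 6, 4                      # 6 tokens, 4 features each (toy sizes)
x = rng.normal(size=(seq_len, d))      # stand-in token vectors
W = rng.normal(size=(d, d)) * 0.1      # hypothetical recurrent weights
U = rng.normal(size=(d, d)) * 0.1      # hypothetical input weights

# RNN-style: step t cannot start until step t-1 has produced h.
h = np.zeros(d)
rnn_states = []
for t in range(seq_len):
    h = np.tanh(h @ W + x[t] @ U)
    rnn_states.append(h)
rnn_states = np.stack(rnn_states)

# Transformer-style: all positions are handled by one matrix product,
# so the work for every token can run at the same time.
parallel_out = np.tanh(x @ U)

print(rnn_states.shape, parallel_out.shape)
```

Both results hold one vector per token, but only the second can be computed without a step-by-step loop over the sequence.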

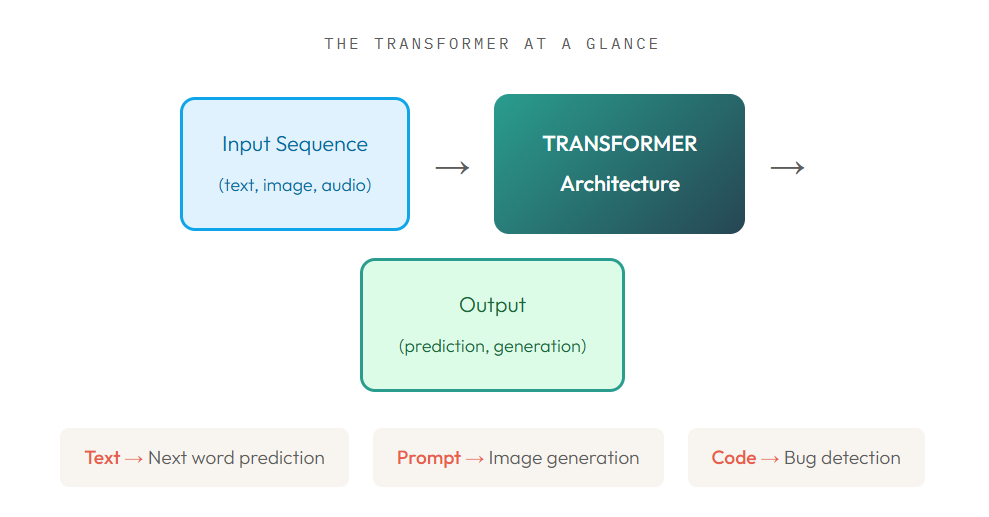

Basic structure of Transformers

A transformer is made of repeating blocks, and each block does two main jobs. The first is attention, which looks at all the words in relation to one another. The second is a feed-forward network, which transforms the information attention has gathered. Stacking 24 such layers gives the depth of the medium-sized GPT-2 model, and stacking 96 gives the depth of the largest GPT-3 model. With each layer the representation is refined; it’s like passing a thought through many smart editors.
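To get a feel for what the 24-layer and 96-layer depths mean in size, the arithmetic below gives a rough weight count for the stacked blocks. It rests on assumptions that do not come from this article: the hidden sizes (1024 and 12288) are taken from the published GPT-2 medium and GPT-3 configurations, and the rule of thumb of about 12 × d_model² weights per block ignores biases, layer norms, and embeddings. The helper name `approx_block_params` is made up for this sketch.

```python
def approx_block_params(n_layers: int, d_model: int) -> int:
    """Rough weight count for the stacked blocks only.

    Assumes each block has 4 * d_model^2 attention weights (query, key,
    value and output projections) and 8 * d_model^2 feed-forward weights
    (d_model -> 4 * d_model -> d_model). Biases, layer norms and
    embeddings are ignored.
    """
    per_layer = 4 * d_model**2 + 8 * d_model**2
    return n_layers * per_layer

# Assumed reference configurations (not taken from the article).
configs = {
    "GPT-2 medium": (24, 1024),
    "GPT-3 175B": (96, 12288),
}
for name, (n_layers, d_model) in configs.items():
    total = approx_block_params(n_layers, d_model)
    print(f"{name}: {n_layers} layers -> ~{total / 1e9:.2f}B weights in blocks")
```

Under these assumptions the estimate comes to roughly 0.3 billion weights for the 24-layer configuration and roughly 174 billion for the 96-layer one. Depth and width grow together in practice, which is why the gap is far larger than the 4× difference in layer count.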

Single Transformer Layer

It consists of three main steps.

Step 1: The model reads all the tokens at once as embeddings. Every word becomes a vector, a point on a map of meaning.

Step 2: Attention decides what each word should focus on. For example, the pronoun “she” in a sentence will connect to a girl’s name such as “Shreya”, and “it” will connect to “phone”.

By linking related words in a sentence, attention resolves this kind of ambiguity.

Step 3: A feed-forward transformation enhances the meaning.

After the previous steps have connected the words, the feed-forward network refines the result further, like turning a rough draft into a polished final document.

Multi head attention

Transformers don’t use a single attention mechanism; they use multiple heads, each free to focus on something different. As a simplified picture, one head might track grammar, another the relationships between words, and others coherence, style, semantics, or long-range meaning. In practice the heads learn their roles during training, and those roles are rarely this clear-cut. It’s like having the sentence examined 25 to 32 times from different angles at the same time, after which the results are combined into a single output. Multi-head attention means multiple perspectives.
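A minimal NumPy sketch of the idea, assuming toy sizes (5 tokens, 8 features, 2 heads) and random weights in place of learned ones. The function and variable names are chosen for this illustration only. Each head works on its own slice of the features and produces its own attention pattern, and the heads are then concatenated and mixed by an output projection.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, n_heads):
    seq_len, d_model = x.shape
    d_head = d_model // n_heads

    def split_heads(m):
        # (seq_len, d_model) -> (n_heads, seq_len, d_head)
        return m.reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (n_heads, seq, seq)
    weights = softmax(scores, axis=-1)                   # one pattern per head
    heads = weights @ v                                  # (n_heads, seq, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights

rng = np.random.default_rng(0)
seq_len, d_model, n_heads = 5, 8, 2
x = rng.normal(size=(seq_len, d_model))                  # stand-in embeddings
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out, weights = multi_head_attention(x, w_q, w_k, w_v, w_o, n_heads)
print(out.shape, weights.shape)
```

The output keeps one vector per token, while `weights` holds a separate token-by-token attention pattern for every head.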

Inside the Transformer: Step by Step

Now let’s look at how Transformers actually work, building from inputs to outputs.

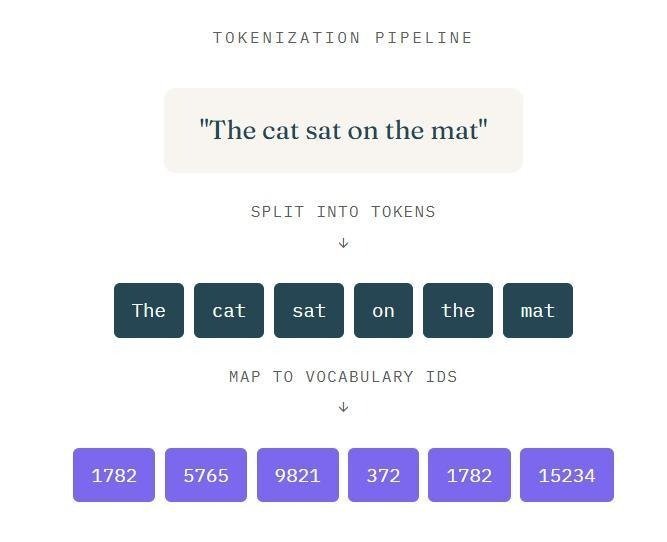

Step 1: Tokenization – Breaking Text into Pieces

Computers work with numbers, not words. Tokenization breaks text into small pieces (tokens) and assigns each a unique ID. Tokens aren’t always complete words: “unhappiness” might become [“un”, “happi”, “ness”]. Modern models typically use vocabularies of roughly 30,000 to 100,000 tokens or more, carefully designed to balance efficiency with coverage of rare words and multiple languages.
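As a toy illustration, the sketch below splits a word by greedily taking the longest piece found in a small vocabulary. The vocabulary and its IDs are invented for this example, and the greedy longest-match rule is a simplification: production tokenizers learn their vocabularies from data, for instance with byte-pair encoding, and may split words differently.

```python
# Hypothetical toy vocabulary: real models learn theirs from data and
# have tens of thousands of entries.
VOCAB = {"un": 0, "happi": 1, "ness": 2, "happy": 3, "h": 4, "a": 5,
         "p": 6, "i": 7, "n": 8, "e": 9, "s": 10, "u": 11, "y": 12}

def tokenize(word: str) -> list[str]:
    """Greedy longest-match split of a word into known pieces."""
    tokens, start = [], 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in VOCAB:
                tokens.append(piece)
                start = end
                break
        else:
            raise ValueError(f"no token covers {word[start]!r}")
    return tokens

tokens = tokenize("unhappiness")
ids = [VOCAB[t] for t in tokens]
print(tokens, ids)   # ['un', 'happi', 'ness'] [0, 1, 2]
```

The list of IDs, not the text, is what the model actually receives.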

Step 2: Embeddings – Words as Coordinates

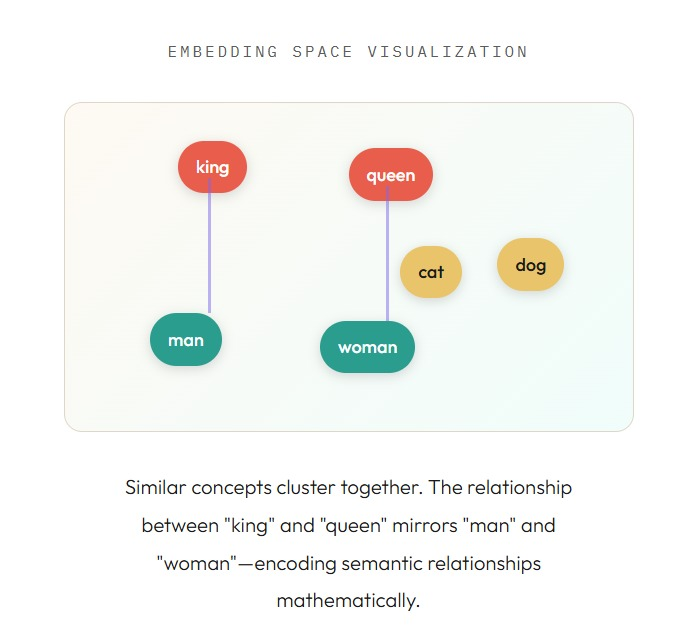

A single ID number tells you nothing about meaning. Embeddings expand each token into a rich vector of hundreds or thousands of numbers that position the word in a “meaning space.”

Think of embeddings like GPS coordinates for meaning. “Coffee” and “espresso” have nearby coordinates. “Coffee” and “democracy” are far apart. The model learns these positions by observing which words appear in similar contexts across billions of sentences.
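The “nearby coordinates” idea can be made concrete with cosine similarity, a common way to compare embedding vectors. The three-dimensional vectors below are hand-picked, hypothetical values chosen only to mirror the example; real embeddings are learned and much longer.

```python
import math

# Hand-picked 3-dimensional toy vectors (hypothetical values); learned
# embeddings have hundreds or thousands of dimensions.
EMBEDDINGS = {
    "coffee":    [0.9, 0.8, 0.1],
    "espresso":  [0.8, 0.9, 0.2],
    "democracy": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

near = cosine_similarity(EMBEDDINGS["coffee"], EMBEDDINGS["espresso"])
far = cosine_similarity(EMBEDDINGS["coffee"], EMBEDDINGS["democracy"])
print(f"coffee vs espresso:  {near:.2f}")
print(f"coffee vs democracy: {far:.2f}")
```

With these toy values the first pair scores close to 1 and the second much lower, which is the numerical version of “nearby” and “far apart”.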

Step 3: Attention – The Core Mechanism

Here’s the breakthrough. Self-attention lets each word look at every other word and calculate relevance scores. Which words should I focus on to understand this word?

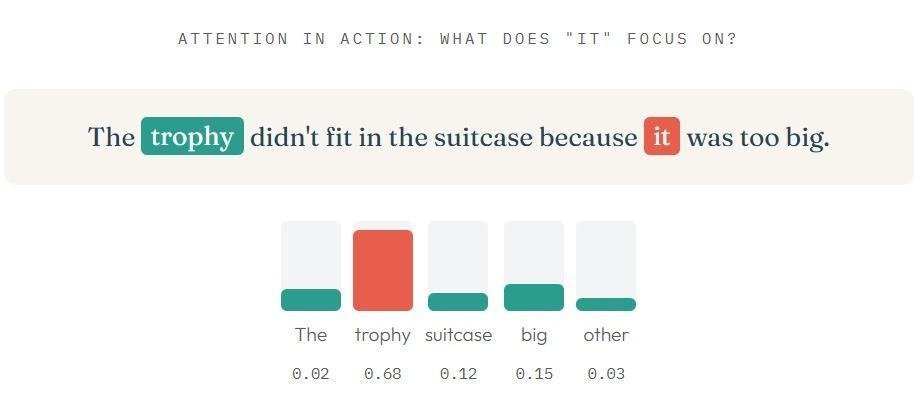

Consider: “The trophy didn’t fit in the suitcase because it was too big.”

What does “it” refer to? The trophy (too big to fit) or the suitcase (too small to contain)? Attention learns to assign high weight to “trophy” when processing “it.”

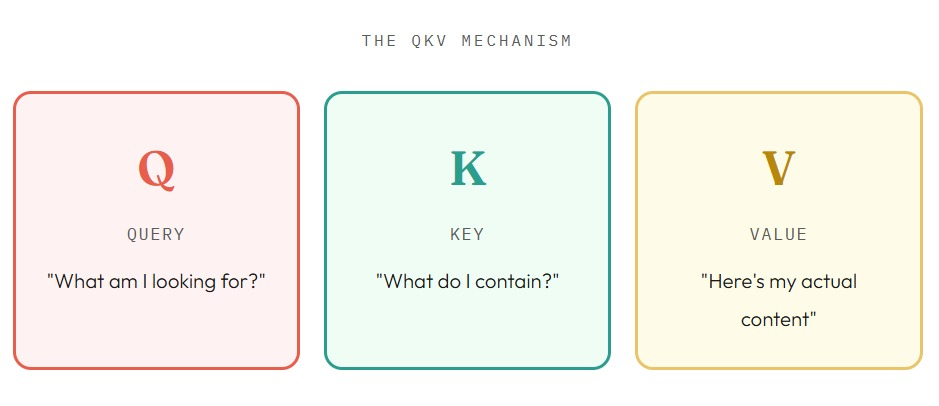

Attention computes these weights using three learned transformations: Query, Key, and Value.

Think of searching a library. Your Query is your research question. Each book’s Key is its catalog entry: keywords describing its contents. The Value is the actual text. You match your query against the keys to find relevant books, then extract information from the matches.
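The Query, Key, and Value steps are usually written as scaled dot-product attention: softmax(Q Kᵀ / √d_k) V. Here is a minimal NumPy sketch of that formula, assuming random stand-in embeddings and random projection matrices (`w_q`, `w_k`, `w_v`) where a trained model would have learned ones. Because the weights are random, the printed attention pattern is not meaningful; the point is the mechanics.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    q = x @ w_q   # Query: what each token is looking for
    k = x @ w_k   # Key: what each token offers as a match
    v = x @ w_v   # Value: the content each token passes on
    scores = q @ k.T / np.sqrt(k.shape[-1])   # relevance of every token pair
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
tokens = ["the", "trophy", "was", "too", "big"]
d_model = 8
x = rng.normal(size=(len(tokens), d_model))   # random stand-in embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

output, weights = self_attention(x, w_q, w_k, w_v)
print(output.shape)        # one updated vector per token
print(weights.round(2))    # row i: how much token i attends to each token
```

Each output vector is a weighted blend of all the Value vectors, with the weights set by how well that token’s Query matches every Key.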

Step 4: Stacking – Building Deep Understanding

Here, stacking means placing transformer layers one after another, so that the output of one layer becomes the input of the next. This is different from stacking in ensemble learning, where a meta-model (such as linear or logistic regression) learns to combine the predictions of independently trained base models (such as decision trees, logistic regression, or random forests).

When we stack many transformer layers, different depths tend to specialize. As a rough picture, the first layers pick up spelling patterns, the next ones grammar, then sentence structure, and the later layers concepts. With 50 to 60 or more layers stacked, the model’s understanding deepens, and the deepest layers capture context and intent. This is why LLMs like Claude, ChatGPT, and Gemini can explain the code we give them, summarize our textbooks, write essays, or draft emails: the input passes through many such layers on the way to the output.
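Stacking works because every layer takes in and gives back data of the same shape, so layers can be chained freely. The NumPy sketch below assumes a simplified block: single-head attention, a ReLU feed-forward network, residual connections, layer normalization without learned scale or bias, and random weights. The names `transformer_block` and `init_block` are made up for this example. It shows the plumbing of a stack, not a trained model.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)

def transformer_block(x, p):
    # Job 1: attention mixes information between tokens.
    q, k, v = x @ p["w_q"], x @ p["w_k"], x @ p["w_v"]
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    x = layer_norm(x + weights @ v @ p["w_o"])      # residual + norm
    # Job 2: feed-forward network transforms each token on its own.
    hidden = np.maximum(0, x @ p["w_1"])            # ReLU
    return layer_norm(x + hidden @ p["w_2"])        # residual + norm

def init_block(rng, d_model):
    shapes = {
        "w_q": (d_model, d_model), "w_k": (d_model, d_model),
        "w_v": (d_model, d_model), "w_o": (d_model, d_model),
        "w_1": (d_model, 4 * d_model), "w_2": (4 * d_model, d_model),
    }
    return {name: rng.normal(scale=0.1, size=s) for name, s in shapes.items()}

rng = np.random.default_rng(0)
seq_len, d_model, n_layers = 5, 8, 4
blocks = [init_block(rng, d_model) for _ in range(n_layers)]  # own weights per layer

x = rng.normal(size=(seq_len, d_model))   # stand-in token embeddings
h = x
for p in blocks:                          # output of one layer feeds the next
    h = transformer_block(h, p)
print(h.shape)
```

Changing `n_layers` is all it takes to make the stack deeper, which is one reason the architecture scales so readily.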

Why Transformers Dominated AI

Transformers took over the world of AI because they solved the limitations of older models (like RNNs and LSTMs) by using attention mechanisms to process all words in a sequence simultaneously. This made them far more efficient, scalable, and adaptable, powering breakthroughs in language, vision, audio, and multimodal AI.

Catalyst for the AI Boom: The 2017 paper “Attention Is All You Need” is considered a landmark moment. It didn’t just improve translation; it laid the foundation for generative AI, question answering, and today’s agentic systems.

Parallel Processing: Unlike RNNs that read text word by word, Transformers analyze entire sequences at once. This parallelism makes training faster and more efficient.

Attention Mechanism: The core innovation of attention lets models focus on the most relevant parts of input data. For example, in a sentence, the model can directly connect “dog” with “barking” even if they’re far apart.

Scalability: Transformers scale beautifully. Adding more layers and parameters leads to deeper understanding without the bottlenecks of older architectures. This is why they underpin massive models like GPT-4, Claude, Gemini, and Llama.

Versatility Across Domains: Initially designed for machine translation, Transformers quickly proved effective in NLP, computer vision, audio, and multimodal tasks. Their adaptability made them the universal architecture for modern AI.